6 minute read

Looking to build scalable, trustworthy AI into your product? Start with AI pilots , small, high-impact experiments that uncover risks and prove what works before you scale.

Dummies save lives. Specifically, crash dummies. Those plastic bodies bolted into car seats are actually sophisticated technology that influences how we build the safety features we’ve come to expect in vehicle design.

Just like the once popular crash test dummy commercials said, you can learn a lot from a dummy. Thanks to them, seatbelts improved, airbags were added, and cars started to crumple in the right places instead of crushing passengers.

Just like a crash dummy reveals hidden dangers before cars hit the road, a well-built AI product pilot uncovers potential flaws before scaling.

Once an organization aligns a new AI offering around customer problems and company goals, you’re ready to assemble a pilot that focuses on answering two critical questions:

● Phase 1: Will the product or feature work?

● Phase 2: What’s the risk?

Will the product or feature work?

Pilots should balance between a sandbox for testing the viability of a product or feature idea and factors that simulate the real world.

In other words, it needs to have enough guardrails to give it a chance and a controlled environment so your teams can closely observe, document and analyze what’s happening.

Practically, this means carefully choosing participants who truly represent your product’s real users and diverse use cases, like:

● A mixture of active and daily engaged users and infrequent users.

● Data sets with a little noise, rather than sanitized, overly simplified test sets.

● Customers with varying levels of technological comfort or familiarity with your product.

During the pilot phase, a product leader’s primary role is asking, “Are we actually solving the intended problem in a meaningful way?”

You’ll also:

● Help your team prioritize features based on value, complexity and feasibility by identifying trade-off decisions between must-have and nice-to-have capabilities.

● Continuously manage expectations with internal stakeholders by transparently sharing progress and challenges. And remember, when AI and ML is involved, there is a higher level of education needed. That makes this step more challenging than it is for a typical project.

● Advocate for a mindset of experimentation, learning and adaptation rather than an immediate answer to “Did this succeed or fail?

What’s the risk

There’s a temptation during pilots to create an environment designed for success and then get prematurely excited when it does succeed. That’s just being human, and it is exciting. However, the pilot should include a second phase after viability: stress testing.

A pilot isn’t just feasibility; it’s your first risk assessment.

Imagine if we put our crash dummies in a car traveling at safe speeds with no accidents as proof of safe design. It wouldn’t be too terribly helpful. Instead, we put them in the worst possible situations to understand risk.

The pilot should first determine if the idea works in a realistic environment, and then you should ask, “What if everything that could go wrong did go wrong?

Hackers for Hire: Google has a dedicated team for this kind of work: the Red Team. It was created in 2016 to improve security.

As Google explains, “The main point is for the red team to emulate the same behaviors a real threat actor would use in a real-world scenario to gain a foothold and remain undetected. Identifying vulnerabilities and other limitations can help us zero in on the specific technical elements that make attacks successful, so we can extract them, study them, and implement solutions that make them less effective.”

Identifying Product Risk with a Pre-Mortem

How do we know what could go wrong? You could make your best guess about the highest risks, but a better answer might come from a pre-mortem with internal teams like security, ops, and support teams. You can even us AI for your pre-mortem; it is a great thought-partner and can be especially helpful in a risk-mitigation session

Ask them to imagine the project failing when it moved from 1,000 users or queries a day to 10,000. Then, have them theorize what might have caused the failure. They might find areas of concern based on their expertise, like:

1. Systems that talk to each other only in the lab

The pilot pulls data from one clean spreadsheet. In production, it must sync with four live systems, each on a different update schedule.

2. Performance that crumbles under real traffic

A model that answers 200 queries a day is fine on a single server. At 20,000 queries, it needs load‑balancing, caching, and new alert rules.

3. Rules and people you didn’t meet in pilot mode

Security asks for a formal pen test. Compliance wants an audit trail. The help desk needs a script for angry callers.

It’s possible none of this showed up in the first phase of the pilot.

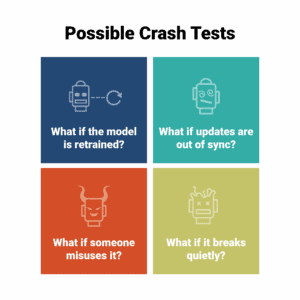

Possible Crash Tests

Consider creating a separate sandbox, but instead of building it to test if the idea will work, try to make it fail. Identify your biggest risk concerns and then simulate them with the same focus on what the real world could look like, but in unfavorable conditions.

1. What if the model is retrained or updated?

Do key behaviors change? Does it forget critical features or reintroduce bugs? Check what happens to historical consistency and user expectations.

2. What if your model, data pipeline, or dependencies don’t update together?

Intentionally mismatch versions and see where breakdowns occur in processing, logging, or UI.

3. What if someone intentionally misuses the system?

Try prompt injection, adversarial inputs, or permission abuse. Can users manipulate outputs, flood content, or access restricted features?

4. What if the product breaks quietly and no one notices?

Disable logging, reduce alert thresholds, and see how long it takes for someone to catch and respond to errors.

Example: What a Test Design Might Look Like

Goal: Test how much poor-quality data your model can handle before performance drops below acceptable levels, and identify which types of messiness are most harmful.

Step 1: Break down what low-quality data looks like in your context: missing values, duplicates, inconsistent formatting, typos, slang, irrelevant data.

Step 2: Start with your clean dataset. Then gradually introduce messiness in controlled increments: 5% noise, 10% noise, 25%, 50%, 75%, etc.

Step 3: Define the “breaking point” where the model is no longer usable.

Step 4: Document the total percent of messiness it can handle before performance dips significantly, and/or the type of error it’s most sensitive to (e.g., label errors vs. missing values)

Advanced Stress Testing Thought Exercises

If you want to do more in-depth planning for the future, you can facilitate thought experiments like the ones Jane McGonigal explains in her book Imaginable. She encourages paying attention to signals, trends, emerging tech, or small changes in behavior that might grow over time.

It’s not just creative thinking. It’s a structured way to stress-test your assumptions. McGonigal talks a lot about urgent optimism, or the belief that you can make a difference in the future, and her exercises help you feel more prepared.

For example:

● What’s one thing your team assumes will stay the same? Now, imagine the opposite happens. What breaks in your product, your process, or your customer relationship?

● What happens if a vendor you’ve partnered with on the project shuts down their business? Do you lose your data? Do you lose your model? Do you have a contingency plan?

● What is one law or regulation that could change everything? How could it make your product better, worse, or illegal?

The Crash Dummy’s Final Lesson

Crash dummies aren’t valuable because they survive crashes; it’s because they don’t.

If your AI pilot is your crash dummy, the goal isn’t keeping the product or feature safe in the sandbox. It’s knowing exactly where you have risk and building a plan to respond. It’s this kind of work that will earn trust not just with leadership but also with your most skeptical customers.

It’s also a story of iterative, intentional cycles of controlled failure and targeted improvement.

AI isn’t predictable; pilots like these are designed to manage complexity, and the approach isn’t new. In the early 2000s, IBM’s Director of the Cynefin Centre, Dave Snowden, introduced what he called safe-to-fail probes to cope with the inherent uncertainty of adaptive systems. It was used with clients like the UK Ministry of Defense to improve life fire exercises for soldiers, a large Asian-Pacific bank for customer retention and a hospital to find strategies to reduce nurse workload.

His approach was straightforward:

● Run many small probes.

● Watch what fails and why.

● Amplify what works, fix or discard what doesn’t.

Treat your AI pilot the same way. Break it on purpose, learn fast, adapt, and then you’re better prepared to start scaling.

Additional AI Resources for Product Professionals:

Stop Chasing AI Hype: Start Solving Market Problems

Leveraging AI to Improve Market Research (Podcast)

Building the Products of the Future with AI with Google DeepMind’s Jonathan Evens (Podcast)

Author

-

The Pragmatic Editorial Team comprises a diverse team of writers, researchers, and subject matter experts. We are trained to share Pragmatic Institute’s insights and useful information to guide product, data, and design professionals on their career development journeys. Pragmatic Institute is the global leader in Product, Data, and Design training and certification programs for working professionals. Since 1993, we’ve issued over 250,000 product management and product marketing certifications to professionals at companies around the globe. For questions or inquiries, please contact [email protected].

View all posts