The future belongs to companies that embrace responsible AI as a competitive edge – where trust, transparency and thoughtful design fuel lasting innovation. Read on to see how your team can lead the way.

Good AI isn’t always fast. It’s why we’re willing to wait minutes (instead of just seconds) for deep research features on ChatGPT, Claude, or Perplexity. We trust that those extra minutes mean the tool is doing something… more, and we hope it leads to better, more reliable outputs.

But in the long run, the best AI will be responsible. The challenge is that many product teams aren’t sure what “responsible AI” means.

A study from the University of California, Berkeley, involving 25 interviews and a survey of 300 product managers, found that teams often assume responsibility and accountability for AI belong to some other department. PMs even hesitate to raise issues or ask questions about responsible AI because they worry they’ll be labeled “troublemakers.”

To complicate things further, 19% of respondents said that not only were there no clear incentives to use AI responsibly, but speed-to-market pressures often took priority over risk mitigation and ethical considerations.

All of this matters because responsible AI is how we earn customers’ trust and build long-term resilience into our products.

A cautionary tale:

Zillow made a big bet on AI with Zillow Offers. The company relied on an algorithm to estimate home values so it could make instant offers and scale its purchasing decisions. In 2021, the weakness in that bet became clear: speed had come at the expense of accuracy. Home pricing, it turns out, is as much art as science, and the model wasn’t flexible enough to navigate the complexity.

The algorithm didn’t just miss, it consistently overestimated values, and not just by a little.

Zillow damaged consumer trust (a long-term consequence that’s hard to measure), lost $304 million in Q3 2021 alone, and had to lay off about 2,000 employees.

“When we decided to take a big swing on Zillow Offers three and a half years ago, our aim was to become a market maker, not a market risk taker, underpinned by the need to forecast the price of homes accurately three to six months into the future…

We have determined this large scale would require too much equity capital, create too much volatility in our earnings and balance sheet, and ultimately result in far lower return on equity than we imagined.”

— Zillow’s 2021 Q3 Shareholder Letter signed by Rich Barton, co-founder & CEO; Allen Parker, CFO

Zillow was acting based on a silent belief: With machine learning and AI, we can accurately forecast housing prices months in advance across thousands of markets.

Responsible AI requires identifying those silent beliefs. It also requires leaders who invite their teams to challenge assumptions and reward them for doing so.

In this situation, those questions might be:

“Are we treating a forecast like a fact?”

“Can our model adapt to local knowledge, like new zoning laws or construction projects?”

“Are our pilot markets representative of the national portfolio?”

Of course, hindsight is always clearer. But responsible AI isn’t about predicting every failure. It’s about creating the conditions where those critical questions can be asked before the damage is done.

For most product teams, the barrier to using AI responsibly isn’t apathy; it’s uncertainty about who leads the work and how it gets done.

That’s why we’ve curated a list of responsible AI principles and outlined clear, practical ways your product team can start this work.

What is Responsible AI?

Does your company have a definition for responsible AI? Does your product team? If not, this is the starting point for your conversations.

We ran a poll on LinkedIn asking, “If your team were asked how your company defines ‘responsible AI,’ could everyone confidently answer?”

- 12% said yes, and 21% said some could.

- The other 67% said that even if they could define it for themselves, they couldn’t confidently define it for the company.

Responsible AI has two parts:

- First, it involves complying with the regulations and laws relevant to your industry and location.

- Second, it means deciding how you should incorporate AI. That includes making thoughtful choices about how AI is deployed in products, so it serves customers without sacrificing the common good, and identifying AI tools that make life better for your employees, not worse.

Rather than a static definition, most companies, governing bodies, and advocacy groups approach responsible AI as a set of guiding principles. For example, the Future of Life Institute outlined 23 principles, known as the Asilomar AI Principles, in 2017. Microsoft chose six principles, while Salesforce identified five.

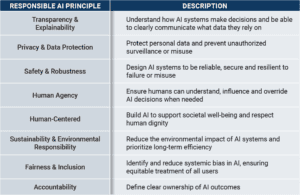

While each company may have a slightly different interpretation of what will support the common good based on industry, use cases, etc., there are some consistent themes:

Principles guide the work when the stakes are high and the answers aren’t obvious, but a definition isn’t the finish line, and it shouldn’t be handed down from the top.

So the real decision isn’t which principles to select. It’s who will join the conversation and how they’ll put those principles into practice.

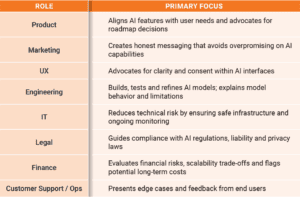

Who’s responsible for how AI is used?

If the answer is “everyone,” you won’t have the accountability needed for progress. However, it’s never just one person’s job.

Instead, focus on creating a multidisciplinary working group that includes people from different departments and levels of the organization.

Option 1: In a Perfect World

Your company might hire a Chief AI Ethics Officer (CAIO). Kay Firth-Butterfield became the first CAIO… ever… in the world, in 2014. Appointing an executive-level leader to spearhead AI ethics would be ideal, but it’s often not feasible. Either way, you’ll want someone who is clearly accountable and able to influence policy and drive change.

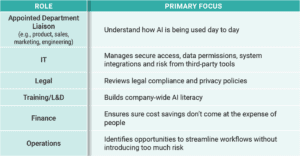

The rest of the team might look a little different depending on whether you’re building AI for your products or adding it to your workflows, but it is likely to include:

If you’re building and deploying AI products

If you’re using AI tools internally (AI agents, automation, LLMs, etc.)

Option 2: Okay, maybe we start small…

You might be thinking, “Listen, I’m just one product leader, I can’t launch a full-scale, company-wide initiative. I simply want to improve how my team uses AI, and maybe set a good example for other departments.”

…fair.

You’ll still start by identifying who will join an AI working group. Your group may be smaller, but your goal is still to gather diverse voices and experiences around the table.

If your department is small enough, it’s possible that the entire team can participate in monthly responsible AI discussions. In this case, the responsibility technically does rest with “everyone,” but we’ll get into roles and responsibilities next.

The First Meeting

You’ve assembled your team… now what? Not all the principles matter for your team, and they’re not all equally important right now.

So the question becomes: What’s happening in our product, team or company that makes one principle seem like the highest priority?

Your executive team might create an initial list of principles for the working group to rank. Or you might prioritize based on where AI poses the greatest risk. There’s no single right answer, but without guiding principles, it’s hard to focus your efforts.

For example, if your guiding principle is human agency, what you measure and where you focus will differ from what you’d do if it were fairness or inclusion. All of these principles matter now and in the future; the point is to choose a focus so you can start the work.

Defining and Dividing the Work

Working on responsible AI often feels ambiguous, and accountability begins when each team member knows exactly how they can contribute. So instead of organizing by job titles, you’ll break the work into four areas: research, process, tools, and training.

If this is a company-wide effort, some roles logically fit certain types of work, like legal handling regulations, IT reviewing tools, or L&D leading training. But there’s no strict formula.

If you’re leading a product department or team effort, just lean into what people are already good at.

Here are the types of tasks for each group:

Research

Goal: Build a strong foundation of knowledge around AI ethics, risks, regulations and user sentiment to inform responsible decision-making.

Types of Tasks:

- Monitor regulations and policy: Identify current laws or upcoming policy changes that could affect your AI use.

- Understand user opinions: Conduct surveys or interviews to assess how customers or internal users feel about AI, and explore how AI is currently used and perceived.

- Track industry trends: Stay updated on publications, conferences and expert discussions around responsible AI.

- Summarize relevant research: Read and distill academic or industry reports related to AI ethics and governance.

- Collect case studies: Gather examples of successful or failed AI implementations to inform your own practices.

Process

Goal: Establish repeatable, transparent systems for evaluating, implementing and updating AI use within your team or organization.

Types of Tasks:

- Create workflows: Design step-by-step processes for how AI tools or models are evaluated and approved.

- Define decision criteria: Identify which ethical, legal and strategic factors should guide AI-related decisions.

- Document transparency: Build accessible documentation that clearly explains how decisions are made and why.

- Schedule reviews: Hold consistent check-ins or retrospectives to evaluate how AI is being used and whether adjustments are needed.

Tools

Goal: Select, manage and review AI tools to ensure they align with ethical standards, support team needs and stay compliant over time.

Types of Tasks:

- Set selection criteria: Define what makes a tool appropriate by considering ethics, legal requirements, usability and practicality.

- Meet with vendors: Collaborate with vendors or tool developers to ensure tools meet your responsible AI standards.

- Track performance: Provide regular reports on how well tools are functioning and whether they align with your ethical goals.

- Gather team feedback: Collect and apply feedback on tool usability, effectiveness and any ethical or functional concerns.

- Maintain inventory: Keep an updated list of AI tools in use, including their purpose, owners and compliance status.

Training

Goal: Build team awareness, confidence and shared responsibility around the ethical use of AI through ongoing education and support.

Types of Tasks:

- Assess understanding: Regularly evaluate how well your team understands responsible AI principles, then work with the tools team to identify training opportunities that help people apply those principles in practice.

- Distribute resources: Share relevant training materials, courses, or learning opportunities across the team.

- Create a knowledge hub: Build an accessible library of responsible AI resources, including policies, tools, and reference materials.

- Promote broader awareness: Make a plan to embed responsible AI practices more widely, like through onboarding or cross-departmental projects.

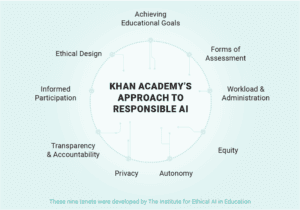

How Khan Academy Builds Responsible AI for Education

What if we brought AI into the classroom? For many people, that’s an uncomfortable thought. The stakes aren’t just profits or jobs; they’re students and their futures.

Since 2005, Khan Academy has been helping students learn through accessible tutoring-style videos, so it makes sense they’d consider how AI might support their work. Rather than rushing to market, they’ve moved forward slowly with responsible AI as their top priority.

Khan Academy’s AI steering group includes educators, product managers, data scientists and legal experts. They’ve defined their principles and incorporated risk management guidelines from the National Institute of Standards and Technology. Their north star for development: achieving educational objectives.

For Khan Academy, a successful AI solution isn’t about giving students answers or inflating test scores. Instead, it’s about helping students think through problems and check their understanding, while giving teachers an unprecedented view into student thought processes.

In a 60 Minutes episode, a teacher at one of the 266 pilot schools explained that reviewing how students use the tool on assignments helps her understand where they might be struggling. She said, “It gives me insight as a teacher on who I need to spend that one-on-one time with.” For students, as one child noted, it’s helpful for those who “aren’t comfortable asking questions in class.” And for Khan Academy, it has helped establish the organization as a leader in AI for education.

The companies building trust with their customers and delivering real value with AI aren’t the ones moving fastest; they’re the ones moving most thoughtfully. Whether you’re deploying AI in products or workflows, the question isn’t whether you can afford to prioritize responsible AI. It’s whether you can afford not to. Start with one principle, assemble your working group, and begin the conversations that will define how your organization uses AI for the next decade.


Author


Paul Young is an executive leader with 26 years of experience in product management and marketing. He has held roles at TeleNetwork, Cisco Systems, Dell, and more, and has contributed to NetStreams, The Fans Zone, and Pragmatic Institute. His strategic acumen has left an imprint on the tech industry. For questions or inquiries, please contact [email protected].
