By Don Fox

This article was originally published on January 24, 2018, on The Data Incubator.

Working on large data science projects usually involves accessing, manipulating, and retrieving data on a server. The workflow then moves client-side, where the user applies more refined analysis and processing, typically tasks that are impossible or too clumsy to do on the server. SQL (Structured Query Language) is ubiquitous in industry, and data scientists will have to use it in their work to access data on the server.

The line between the data manipulation that should be done server-side using SQL and the manipulation that should be done client-side using a language like Python is not clear. Further, people who are uncomfortable with SQL or dislike using it may be tempted to keep server-side manipulation to a minimum and reserve more of those actions for the client side. With powerful and popular Python libraries for data wrangling and manipulation, that temptation has increased.

This article will compare the execution time for several typical data manipulation tasks such as join and group by using PostgreSQL and pandas. PostgreSQL, often shortened as Postgres, is an object-relational database management system. It is free and open-source and runs on all major operating systems. Pandas is a Python data manipulation library that offers data structures akin to Excel spreadsheets and SQL tables and functions for manipulating those data structures.

The performance will be measured for both tools for the following actions:

- select columns

- filter rows

- group by and aggregation

- load a large CSV file

- join two tables

How these tasks scale as a function of table size is explored by running the analysis on datasets ranging from ten to ten million rows. These datasets are stored as CSV files and have four columns; the entries of the first two columns are floats, the third are strings, while the last are integers representing a unique id. For joining two tables, a second dataset is used, consisting of a column of integer ids and a column of floats.

For each of the five tasks listed above, the benchmark was run with one hundred replicates for each dataset size. The Postgres part of the benchmark was performed using pgbench, a command-line program for running benchmark tests of Postgres. It can accept custom scripts containing the SQL queries to benchmark. The computer used for this study runs Ubuntu 16.04, with 16 GB of RAM and an 8-core processor at 1.8 GHz. The code used for this benchmark can be found on GitHub. The repository contains all the code to run the benchmark, the results as JSON files, and figures plotting the comparison of the two methods.
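The replicate-and-average procedure on the pandas side can be sketched with the standard library's `timeit` module. This is a minimal illustration, not the code from the repository: the helper name `mean_runtime` and the small random DataFrame are assumptions made for the example.

```python
import timeit

import numpy as np
import pandas as pd


def mean_runtime(task, replicates=100):
    """Return the mean wall-clock time (seconds) of `task` over `replicates` runs."""
    # number=1 means each replicate executes the task exactly once.
    times = timeit.repeat(task, repeat=replicates, number=1)
    return sum(times) / len(times)


# Hypothetical stand-in data; the real benchmark uses the CSV datasets.
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "score_1": rng.random(1_000),
    "section": rng.choice(list("AB"), size=1_000),
})

elapsed = mean_runtime(lambda: df[df["section"] == "A"])
print(f"filter rows, mean over 100 replicates: {elapsed:.6f} s")
```

Averaging over many replicates smooths out noise from the operating system and caches, which matters most for the small datasets where a single run takes only microseconds.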

It is important for data scientists to know the limitations of their tools and which approaches are optimal in terms of time. Although smaller projects will not benefit much from a speedup, small percentage gains in more data-intensive applications translate into large absolute time savings.

Benchmark Results

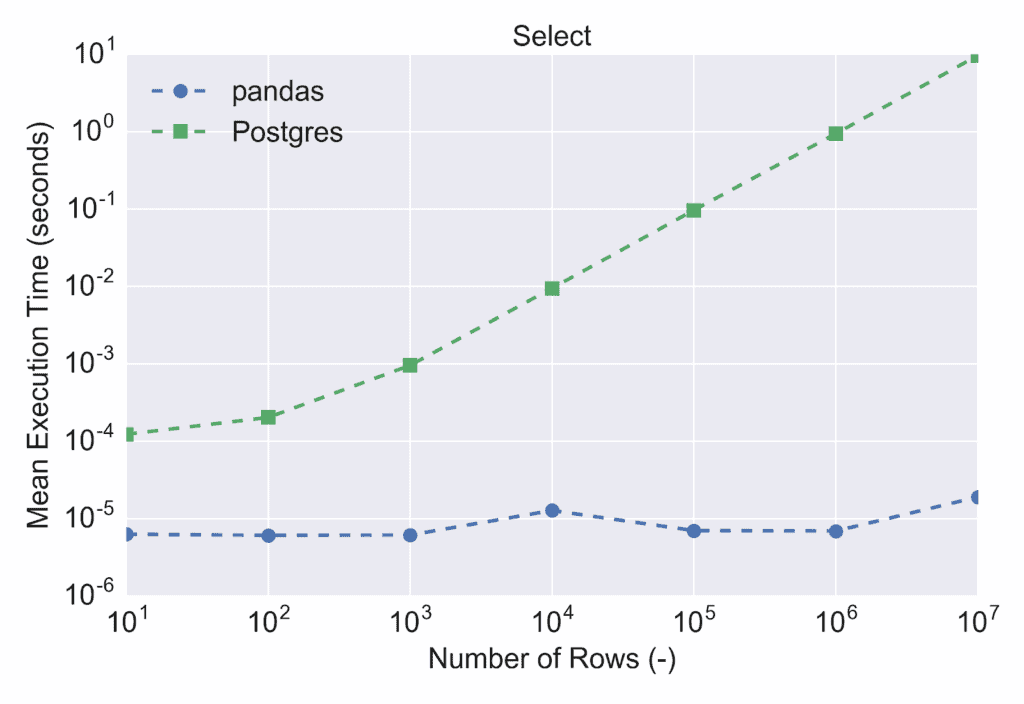

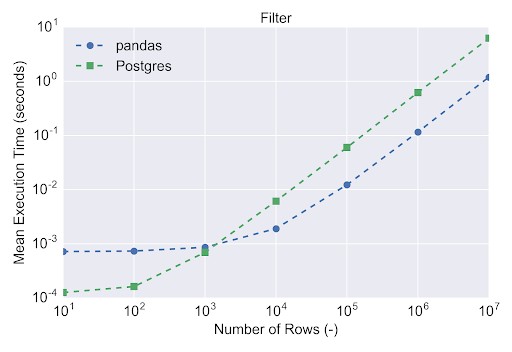

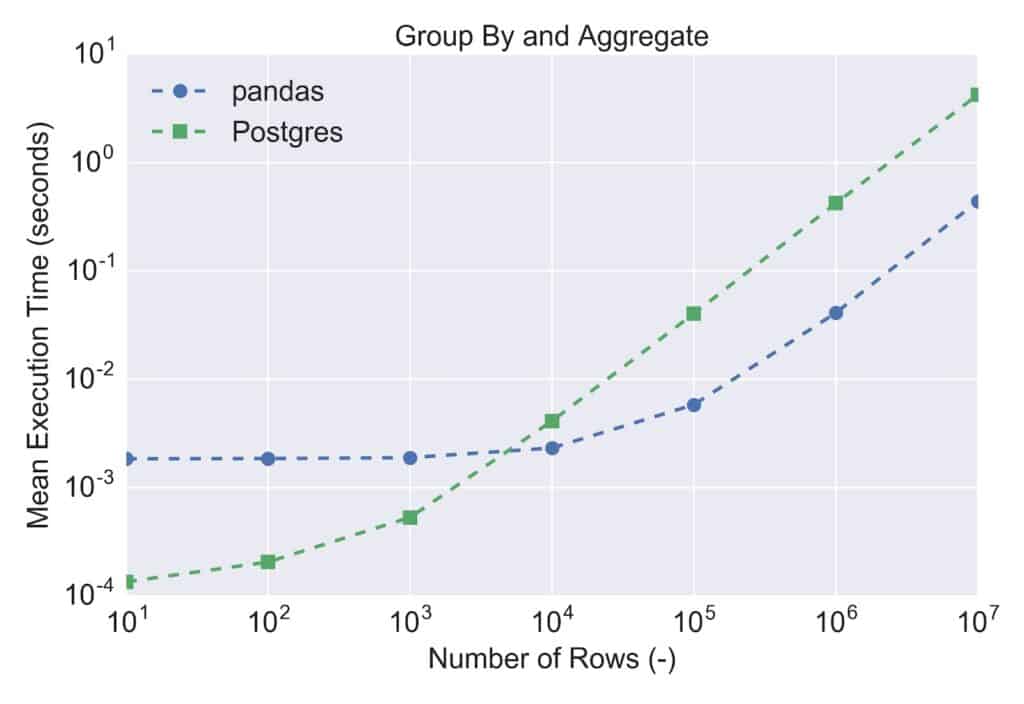

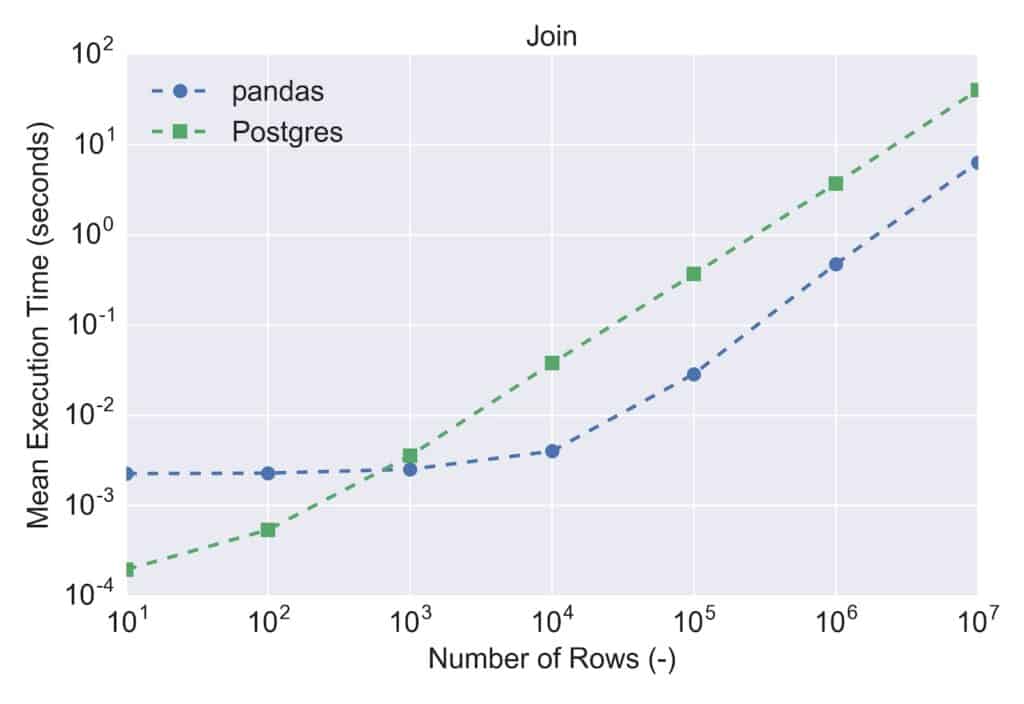

The benchmarking relied on two datasets, referred to as A and B. The headers and data types (in parentheses) for the columns of dataset A are “score_1” (float), “score_2” (float), “id” (integer), and “section” (string). For dataset B, the headers and data types are “score_3” (float) and “id” (integer). The “id” column relates the two datasets. The figures show the mean execution time as a function of the number of rows in the datasets, using log-log axes. A description of each task and the code used for each tool follows.
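To experiment with tables of this shape, synthetic stand-ins for datasets A and B can be generated as sketched below. Only the column names and data types come from the description above; the uniform random scores, the section labels "A" to "D", and the helper name `make_datasets` are assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def make_datasets(n_rows, seed=0):
    """Build synthetic stand-ins for datasets A and B with n_rows rows each."""
    rng = np.random.default_rng(seed)
    ids = np.arange(n_rows, dtype=np.int64)
    df_A = pd.DataFrame({
        "score_1": rng.random(n_rows),                       # float
        "score_2": rng.random(n_rows),                       # float
        "id": ids,                                           # unique integer id
        "section": rng.choice(list("ABCD"), size=n_rows),    # assumed labels
    })
    df_B = pd.DataFrame({
        "score_3": rng.random(n_rows),                       # float
        "id": rng.permutation(ids),                          # same ids, shuffled
    })
    return df_A, df_B


# Dataset sizes from ten to ten million rows, one per power of ten.
sizes = [10 ** k for k in range(1, 8)]
df_A, df_B = make_datasets(sizes[2])  # 1,000 rows
print(df_A.head())
```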

For selecting columns, one column from the table/DataFrame was returned. The code for this task is:

pandas: df_A['score_1']

Postgres: SELECT score_1 FROM test_table_A;

For filtering rows, a table/DataFrame was returned with only the rows meeting a criterion. The code for this task is:

pandas: df_A[df_A['section'] == 'A']

Postgres: SELECT * FROM test_table_A WHERE section = 'A';
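The selection and filtering expressions can be tried on a tiny hand-made DataFrame. The three rows below are made up for illustration; only the column names follow dataset A.

```python
import pandas as pd

# Made-up rows with the column layout of dataset A.
df_A = pd.DataFrame({
    "score_1": [0.1, 0.5, 0.9],
    "score_2": [0.3, 0.2, 0.7],
    "id": [0, 1, 2],
    "section": ["A", "B", "A"],
})

# Column selection returns a Series, comparable to SELECT score_1.
selected = df_A["score_1"]

# A boolean mask keeps matching rows, comparable to WHERE section = 'A'.
filtered = df_A[df_A["section"] == "A"]

print(selected)
print(filtered)
```

The filter keeps the rows with ids 0 and 2 and preserves the original index labels.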

For grouping and applying aggregation functions, records are grouped by section, and the mean of score_1 and the maximum of score_2 are reported for each section. The code for this task is:

pandas: df_A.groupby('section').agg({'score_1': 'mean', 'score_2': 'max'})

Postgres: SELECT AVG(score_1), MAX(score_2) FROM test_table_A GROUP BY section;
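A small worked example makes the aggregation concrete. The four rows are made up for illustration.

```python
import pandas as pd

# Made-up rows: two records per section.
df_A = pd.DataFrame({
    "score_1": [1.0, 3.0, 2.0, 4.0],
    "score_2": [0.5, 0.9, 0.1, 0.3],
    "section": ["A", "A", "B", "B"],
})

# Mean of score_1 and maximum of score_2 within each section.
result = df_A.groupby("section").agg({"score_1": "mean", "score_2": "max"})
print(result)
```

Section A yields a mean score_1 of 2.0 and a maximum score_2 of 0.9; section B yields 3.0 and 0.3. Note that pandas keeps the section labels as the index of the result, whereas the SQL query as written returns only the two aggregate columns.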

For joining, the two datasets are joined on their id columns and the resulting table/DataFrame is returned. The code for this task is:

pandas: df_A.merge(df_B, left_on='id', right_on='id')

Postgres: SELECT * FROM test_table_A JOIN test_table_B ON test_table_A.id = test_table_B.id;
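The join can be illustrated on two tiny made-up tables. Because the key has the same name on both sides, `on="id"` is used here as a shorthand for passing `left_on` and `right_on` separately; `merge` performs an inner join by default, matching a plain SQL `JOIN`.

```python
import pandas as pd

# Made-up rows; dataset B deliberately lacks id 1.
df_A = pd.DataFrame({
    "score_1": [0.1, 0.5, 0.9],
    "id": [0, 1, 2],
    "section": ["A", "B", "A"],
})
df_B = pd.DataFrame({
    "score_3": [7.0, 8.0],
    "id": [2, 0],
})

# Inner join on the shared key: only ids present in both tables survive.
joined = df_A.merge(df_B, on="id")
print(joined)
```

The row with id 1 is dropped because it has no match in the second table.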

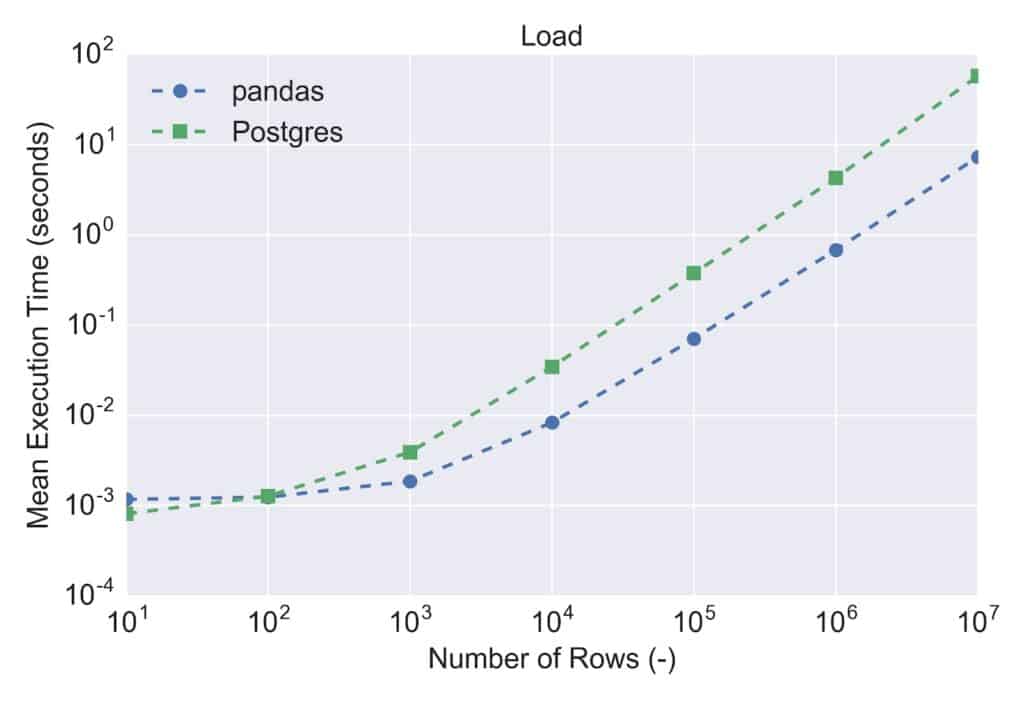

For loading a CSV file, dataset A is loaded from disk to create a table/DataFrame. The code for this task is:

pandas: df_A = pd.read_csv('PATH_TO_CSV_FILE', header=None, index_col=False, names=columns_A)

Postgres: COPY test_table_A FROM 'PATH_TO_CSV_FILE' WITH DELIMITER ',';
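The `read_csv` call can be tried without a file on disk by reading from an in-memory buffer. The two CSV rows and the column order in `columns_A` are assumptions for illustration; the file has no header row, which is why `header=None` and `names` are passed.

```python
import io

import pandas as pd

# Two made-up, header-less CSV rows standing in for the file on disk.
csv_text = "0.1,0.3,0,A\n0.5,0.2,1,B\n"

# Assumed column order for this example.
columns_A = ["score_1", "score_2", "id", "section"]

df_A = pd.read_csv(
    io.StringIO(csv_text),  # a path such as 'PATH_TO_CSV_FILE' works the same way
    header=None,            # the data starts on the first line
    index_col=False,        # do not use the first column as the index
    names=columns_A,        # supply the column names explicitly
)
print(df_A.dtypes)
```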

Conclusions

Overall, pandas outperformed Postgres, often running five to ten times faster on the larger datasets. Postgres performed better only on the smaller datasets, typically those with fewer than a thousand rows. Selecting columns was very efficient in pandas, with O(1) time complexity, because the DataFrame is already stored in memory. In general, loading and joining were the tasks that took the longest, requiring more than a second for large datasets.

For the dataset sizes investigated, pandas is the better tool for the data analysis tasks studied. However, pandas does have its limitations and there is still a need for SQL. With pandas, the data is stored in memory, and it is difficult to load a CSV file larger than about half of the system’s memory. For the ten-million-row dataset, the file size was about 400 MB, but the dataset only had four columns. Datasets often contain hundreds of columns, resulting in file sizes on the order of 10 GB when the dataset has over a million rows.
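One way to gauge whether a dataset will fit in memory is to measure a sample and extrapolate. The sketch below is a rough estimate under stated assumptions: the sample data is randomly generated, and the projection assumes memory use grows linearly with the number of rows.

```python
import numpy as np
import pandas as pd

n_rows = 100_000
rng = np.random.default_rng(0)

# Made-up sample with the same column types as dataset A.
df = pd.DataFrame({
    "score_1": rng.random(n_rows),
    "score_2": rng.random(n_rows),
    "id": np.arange(n_rows, dtype=np.int64),
    "section": rng.choice(list("ABCD"), size=n_rows),
})

# deep=True also counts the memory held by the string values.
mem_bytes = int(df.memory_usage(deep=True).sum())
bytes_per_row = mem_bytes / n_rows

# Linear extrapolation to ten million rows.
projected_gb = bytes_per_row * 10_000_000 / 1e9
print(f"{bytes_per_row:.1f} bytes/row, about {projected_gb:.2f} GB at ten million rows")
```

The in-memory footprint can differ noticeably from the CSV file size, especially for string columns, so measuring a sample is more reliable than reading the size off disk.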

Postgres and pandas are ultimately different tools with overlapping functionality. Postgres and other SQL-based database systems were created to manage databases and offer users a convenient way to access and retrieve data, especially across multiple tables. A server running Postgres would have all the datasets stored as tables, and it would be impractical for a user to transfer every required table to their own system and use pandas to perform tasks such as join and group by on the client side. Pandas was created for data manipulation, and its strength lies in complex data analysis operations. One should not view pandas and Postgres as competing entities but rather as important tools making up the data science computational stack.
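The division of labor described above can be sketched as follows: the database reduces the data with SQL, and pandas takes over for the remaining analysis. To keep the example self-contained, an in-memory SQLite database from the Python standard library stands in for a Postgres server; the rows and the derived `gap` column are made up for illustration.

```python
import sqlite3

import pandas as pd

# SQLite stands in for the Postgres server in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE test_table_A (score_1 REAL, score_2 REAL, id INTEGER, section TEXT)"
)
conn.executemany(
    "INSERT INTO test_table_A VALUES (?, ?, ?, ?)",
    [(1.0, 0.5, 0, "A"), (3.0, 0.9, 1, "A"), (2.0, 0.1, 2, "B")],
)
conn.commit()

# Server side: aggregate so that only a small summary crosses the wire.
query = """
    SELECT section, AVG(score_1) AS mean_score_1, MAX(score_2) AS max_score_2
    FROM test_table_A
    GROUP BY section
    ORDER BY section
"""
summary = pd.read_sql_query(query, conn)
conn.close()

# Client side: continue the analysis in pandas on the reduced result.
summary["gap"] = summary["mean_score_1"] - summary["max_score_2"]
print(summary)
```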