In the first white paper of this series, “Making the Leap from AI Investments to Business Results,” I discussed a holistic approach to designing AI solutions and driving value for your customers and shareholders.

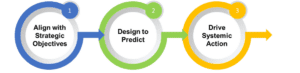

Here’s the framework used to secure return on investment from your AI projects:

1. Align with Strategic Objectives – Before investing in any AI projects, start with the business objectives. Work your way to the AI projects or use cases that provide insights to accelerate achieving the strategic objectives for the company. This approach ensures that you are investing in the right AI projects for the company from the start.

2. Design to Predict – Before you build out the analytics model, thoroughly validate the approach(es) so you don’t have to make costly course corrections down the road. Build the models to predict the outcomes you’re looking for. Plan early to build the visualization of the model insights for the audiences that need to take an action.

3. Drive Systemic Action – Insight without any action is a wasted effort. Plan on change management to secure buy-in from all levels within the organization. Refine business processes and align training, incentives, and performance management, so all relevant operations in the business are aligned to act on the insights.

Let’s dive deeper into the second element of the framework, “design to predict.” Why is this critical?

The most common reasons that AI implementations do not achieve their intended results include:

- Approach: Lack of clear strategy and understanding of what is achievable

- Design: Poor model design, data and governance issues, and algorithm bias

- Implementation: Limited internal support for full implementation

- Trust: Lack of trust in the insights

- Action: Inability to translate insights to action

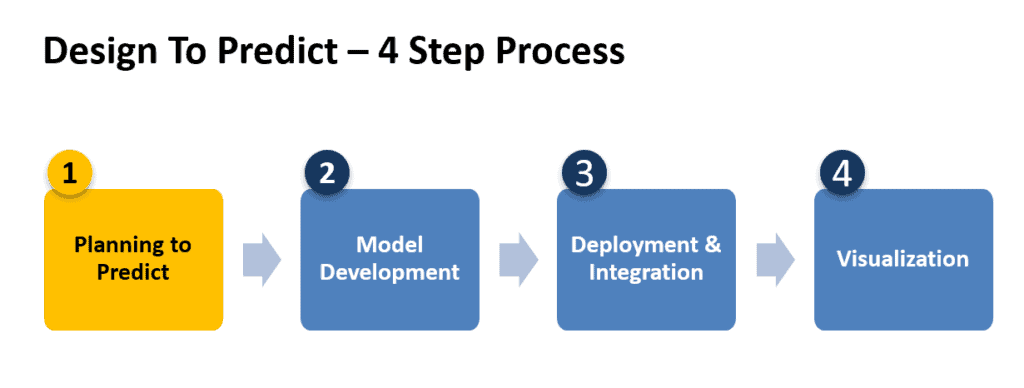

It is important to ensure that the model is aligned to achieve the business objectives and is designed to accurately predict the desired outcomes. Consider the following four-step process for design to predict:

1. Planning to Predict

Before investing significant time and money in building AI-based models, it is crucial to build a proof of concept, or “POC.” But how do you build an effective POC? The first step is to determine the overall objectives, the current process, what data is available and (most importantly) how well the available data can predict the desired outcomes.

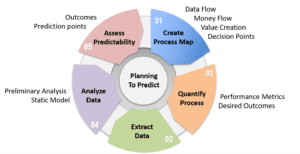

To that end, planning to predict is a structured framework for determining the model’s viability before investing a significant amount of time in building the model(s). Here is the framework:

1.1 Create a Process Map

Develop a view of the current process outlining the data flow and money flow. The flow of money often provides insight into value creation in the process; however, you may need to create a separate view that explicitly highlights where value is created. Define key actions in the process and the critical decision points. This is important for prioritizing the predictions that support the decision points in the process. Most of this information can be derived through interviews with key personnel and reviews of existing materials.

1.2 Quantify the Process

Develop a quantified view of the process by identifying key performance metrics at each critical decision point. Determine both the current metrics and desired metrics. Desired metrics can be based on available benchmarks wherever possible. Craft the desired outcomes around strategic objectives.
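
As an illustration, the quantified view can be as simple as a table of current and desired values per decision point, ranked by the size of the gap. The sketch below is one possible layout, not a prescribed one; the decision points, metric names and numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DecisionPointMetric:
    decision_point: str
    metric: str
    current: float
    desired: float

    def gap(self) -> float:
        """Absolute improvement needed to reach the desired value."""
        return self.desired - self.current

    def gap_pct(self) -> float:
        """Improvement needed, as a percentage of the current value."""
        return 100.0 * self.gap() / self.current


# Hypothetical values for illustration only.
metrics = [
    DecisionPointMetric("Lead qualification", "conversion rate (%)", 20.0, 25.0),
    DecisionPointMetric("Contract renewal", "renewal rate (%)", 80.0, 90.0),
]

# Rank decision points by relative gap, largest first.
ranked = sorted(metrics, key=lambda m: abs(m.gap_pct()), reverse=True)
for m in ranked:
    print(f"{m.decision_point}: {m.metric} {m.current} -> {m.desired} ({m.gap_pct():+.1f}%)")
```

Ranking by relative gap is only one way to prioritize; in practice you would likely also weigh the business value attached to each decision point.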

1.3 Extract Data

Collect relevant and representative data across the process map. Consider both structured and unstructured data. Data needs to be cleansed and transformed into the required formats and structure suitable for analysis. Consider the following sources of data:

- Data within organizational systems readily accessible (e.g., CRM, databases, etc.)

- Data within the organization not readily accessible (e.g., PDFs, pictures, surveys, etc.)

- Data outside the organization or publicly available data (e.g., average income levels by zip code available from data published by the IRS). This data could be used to augment data within the organization to provide richer insights.
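
To make the cleansing and augmentation steps concrete, here is a minimal sketch using only the Python standard library. It assumes internal records keyed by zip code and an external lookup table; the field names, customer IDs and income figures are invented for illustration and do not come from any real data set.

```python
# Hypothetical internal CRM records with inconsistent formatting and a missing value.
crm_rows = [
    {"customer_id": "C001", "zip": " 85281", "annual_spend": "1,200.50"},
    {"customer_id": "C002", "zip": "10001 ", "annual_spend": "840"},
    {"customer_id": "C003", "zip": "60601", "annual_spend": ""},
]

# Hypothetical external lookup (made-up numbers, not real published figures).
avg_income_by_zip = {"85281": 52000, "10001": 91000}


def clean_row(row):
    """Trim whitespace and convert spend to a float (None if missing)."""
    spend = row["annual_spend"].replace(",", "").strip()
    return {
        "customer_id": row["customer_id"].strip(),
        "zip": row["zip"].strip(),
        "annual_spend": float(spend) if spend else None,
    }


def augment(row, lookup):
    """Attach the external value for the row's zip code (None if not found)."""
    return {**row, "avg_income": lookup.get(row["zip"])}


dataset = [augment(clean_row(r), avg_income_by_zip) for r in crm_rows]
for row in dataset:
    print(row)
```

Keeping missing values as `None` rather than silently filling them makes it easier to see, in the later analysis steps, how much of the data is actually usable.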

1.4 Analyze Data

Conduct a preliminary analysis suited to a POC. At this stage the analysis is considered “manual” because the data is not yet linked to the source systems.

It is essentially a static model in which the data from the previous step is run through candidate AI models to determine predicted outcomes. Analysis tools at this stage could include Microsoft Excel or a statistical software package such as IBM SPSS, JMP, SAS or MATLAB. Try multiple models to determine which best fit your use case.

1.5 Assess Predictability

Determine the list of data fields and variables that represent the critical decision points and desired outcomes. For each model, assess the degree to which the data predicts the outcomes. Based on this, you should prioritize the AI models and the data elements that best predict the desired outcomes.
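
One simple way to screen data fields at this stage is to rank them by the strength of their correlation with the outcome. The sketch below uses the Pearson correlation coefficient, which is my choice for illustration rather than a method the framework prescribes, and it only captures linear relationships. The field names and values are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


# Hypothetical candidate predictors and outcome, for illustration only.
candidates = {
    "tenure_months": [1, 2, 3, 4, 5, 6],
    "support_tickets": [5, 3, 6, 2, 4, 1],
    "region_code": [1, 1, 1, 1, 1, 1],
}
outcome = [2, 4, 6, 8, 10, 12]

scores = {name: pearson(values, outcome) for name, values in candidates.items()}

# Rank by absolute correlation: strong negative relationships are also predictive.
ranked = sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)
for name, r in ranked:
    print(f"{name}: r = {r:+.2f}")
```

A screen like this is a starting point for prioritization, not a verdict: a field with a weak linear correlation can still be useful to a non-linear model or in combination with other fields.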

Based on the results from this step, you can review the process map, refine data collection and the analysis to improve the predictability of the data and the models to meet your needs.

An important point to consider in the planning to predict and subsequent model development steps is “repeatability.” Often, when the POC is deemed a success, companies accelerate efforts to build the production models and systems too quickly without paying sufficient attention to the ability of the solution design to scale.

By planning for repeatability early in the process, companies can save money and time by implementing a solution designed for scalability from the ground up.

2. Model Development

Several standard AI models can be used to identify patterns to predict outcomes. Models include Linear Regression, Deep Neural Networks, Bayesian Algorithms, etc. After the planning to predict step, you should have a good idea of the analytical model that best represents your particular use case and the likely predictors.

Set aside a portion of the data to conduct a blind test of the model(s) and utilize the remaining data to train the model(s). Develop the selected model(s) using a standard coding platform such as Python.
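
A minimal sketch of setting data aside for a blind test, using only the standard library. The 20% test fraction and the fixed seed are assumptions chosen for illustration; the helper name `split_holdout` is hypothetical.

```python
import random


def split_holdout(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows and return (train, test) with no overlap."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


rows = list(range(100))  # stand-in for real records
train, test = split_holdout(rows)
print(len(train), len(test))
```

A random split like this suits records that are independent of one another. For time-ordered data, holding out the most recent period is usually a more honest test.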

Test the model with the test data and refine the configurations to improve prediction accuracy. Ideally, define an API (Application Programming Interface) for the model to simplify integration and future upgrades.
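
The sketch below shows the shape of this step under simplifying assumptions: a one-variable least-squares model stands in for whichever model you selected, the data is made up and noise-free, and `predict_api` is a hypothetical wrapper illustrating a stable request and response format rather than any particular web framework.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    slope: float
    intercept: float
    version: str = "poc-0.1"  # hypothetical version label

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def fit(xs, ys) -> LinearModel:
    """Ordinary least squares for a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return LinearModel(slope, mean_y - slope * mean_x)


def mean_absolute_error(model, xs, ys) -> float:
    """Average absolute difference between predictions and actual values."""
    return sum(abs(model.predict(x) - y) for x, y in zip(xs, ys)) / len(xs)


def predict_api(model, request: dict) -> dict:
    """Stable request/response shape that callers integrate against."""
    return {
        "model_version": model.version,
        "prediction": model.predict(float(request["x"])),
    }


# Made-up training and held-out test data following y = 2x + 1.
train_x, train_y = [1, 2, 3, 4, 5], [3, 5, 7, 9, 11]
test_x, test_y = [6, 7], [13, 15]

model = fit(train_x, train_y)
mae = mean_absolute_error(model, test_x, test_y)
response = predict_api(model, {"x": 10})
print(mae, response)
```

Because callers depend only on the request and response format, the model behind `predict_api` can be retrained or replaced without changing the systems that consume it, which is the point of defining the API early.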

Ensure the models are designed with attention to data and governance requirements while incorporating safeguards to avoid algorithm or design bias.

3. Deployment and Integration

Build and integrate the model into the production systems as appropriate. Design and implement the code to extract and transform the required data on a dynamic basis and integrate the model with the data sources.

You may need to integrate with other standard APIs to access data from CRM systems, IoT systems, phone systems, etc.
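
As a rough sketch of what extracting and transforming data on a dynamic basis can look like, the example below pulls records from pluggable sources, normalizes them and passes them to a model. The two `fetch_*` functions are stand-ins that return made-up records; in a real deployment they would call the relevant system APIs. The `score` function is a placeholder for the deployed model.

```python
def fetch_from_crm():
    """Stand-in for a CRM API call; returns made-up records."""
    return [
        {"Customer_ID": "C001", "Usage": "12"},
        {"Customer_ID": "C002", "Usage": None},
    ]


def fetch_from_iot():
    """Stand-in for an IoT data feed; returns made-up records."""
    return [{"customer_id": "C003", "usage": 7}]


def transform(record):
    """Lowercase keys and coerce usage to float; return None if unusable."""
    row = {key.lower(): value for key, value in record.items()}
    if row.get("usage") is None:
        return None
    return {"customer_id": row["customer_id"], "usage": float(row["usage"])}


def score(row):
    """Placeholder model: any callable that takes a row and returns a number."""
    return 2.0 * row["usage"] + 1.0


def run_pipeline(sources, model):
    """Extract from each source, transform, and score every usable record."""
    results = []
    for fetch in sources:
        for raw in fetch():
            row = transform(raw)
            if row is not None:
                results.append({**row, "prediction": model(row)})
    return results


predictions = run_pipeline([fetch_from_crm, fetch_from_iot], score)
for p in predictions:
    print(p)
```

Treating each data source as an interchangeable function keeps the pipeline unchanged when a new system is added later.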

4. Visualization

If you want to act on insights from the analytical models, you will need to make the relevant data and insights visible to the specific audiences who need to take action or be aware of the changes.

Define the data elements that need to be tracked and utilized to trigger actions. Data elements could include historical data, thresholds, alerts, data that would need to be actioned, etc.

Define the actions that need to be taken at each decision point and the owners across the organization. Design and implement the visualization dashboards organized by type of audience and owner.
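
Before building dashboards, it can help to write the thresholds, actions and owners down as data. The sketch below is one hypothetical way to do that: each rule ties a tracked metric to a threshold, an owner and an action, and triggered alerts are grouped by owner. The metric names, thresholds and owners are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str
    threshold: float
    direction: str  # "above" or "below"
    owner: str
    action: str

    def triggered(self, value: float) -> bool:
        if self.direction == "above":
            return value > self.threshold
        return value < self.threshold


# Hypothetical rules and latest model outputs.
rules = [
    AlertRule("churn_risk", 0.7, "above", "Account management", "Schedule a retention call"),
    AlertRule("forecast_inventory_days", 5, "below", "Supply chain", "Expedite replenishment"),
]
latest = {"churn_risk": 0.82, "forecast_inventory_days": 9}


def alerts_by_owner(rules, values):
    """Group the actions for every triggered rule under the owner responsible."""
    grouped = {}
    for rule in rules:
        value = values.get(rule.metric)
        if value is not None and rule.triggered(value):
            grouped.setdefault(rule.owner, []).append(
                f"{rule.metric}={value}: {rule.action}"
            )
    return grouped


alerts = alerts_by_owner(rules, latest)
print(alerts)
```

Grouping by owner mirrors the idea of organizing dashboards by audience: each owner sees only the alerts they are expected to act on.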

The design to predict process will help improve your odds of success by planning effective models to identify patterns and uncover insights so you can take relevant actions.

* * *

Author

Harish Krishnamurthy is president at Sciata and has held leadership roles across P&L, sales, marketing and strategy during his tenure at IBM, Insight Enterprises and Spear Education.
